AI Fundamentals: Agent Definitions

What is an Agent? Definitions abound, but can we boil it down to something simpler?

This post is the first in an occasional series I plan to write on fundamental concepts that are useful in understanding AI systems. Given the rapid pace of change in AI, it's easy to lose track of underlying principles, and some terms seem to take on new meanings every week.

We'll start with one of those: Agents.

2025 is apparently the year of the agent. The rapid advances in LLMs have brought us incredible new capabilities in terms of text, image, and even video generation, but the excitement has shifted to systems that go beyond generation and act in the digital or real world.

This excitement is definitely warranted. AI becomes orders of magnitude more useful if it can "take action." We've had many years of software automation already, but much of it has been static and brittle. Infusing it with more agile decision-making will create huge wins. It's also very hard to do well (but we'll get to that later!).

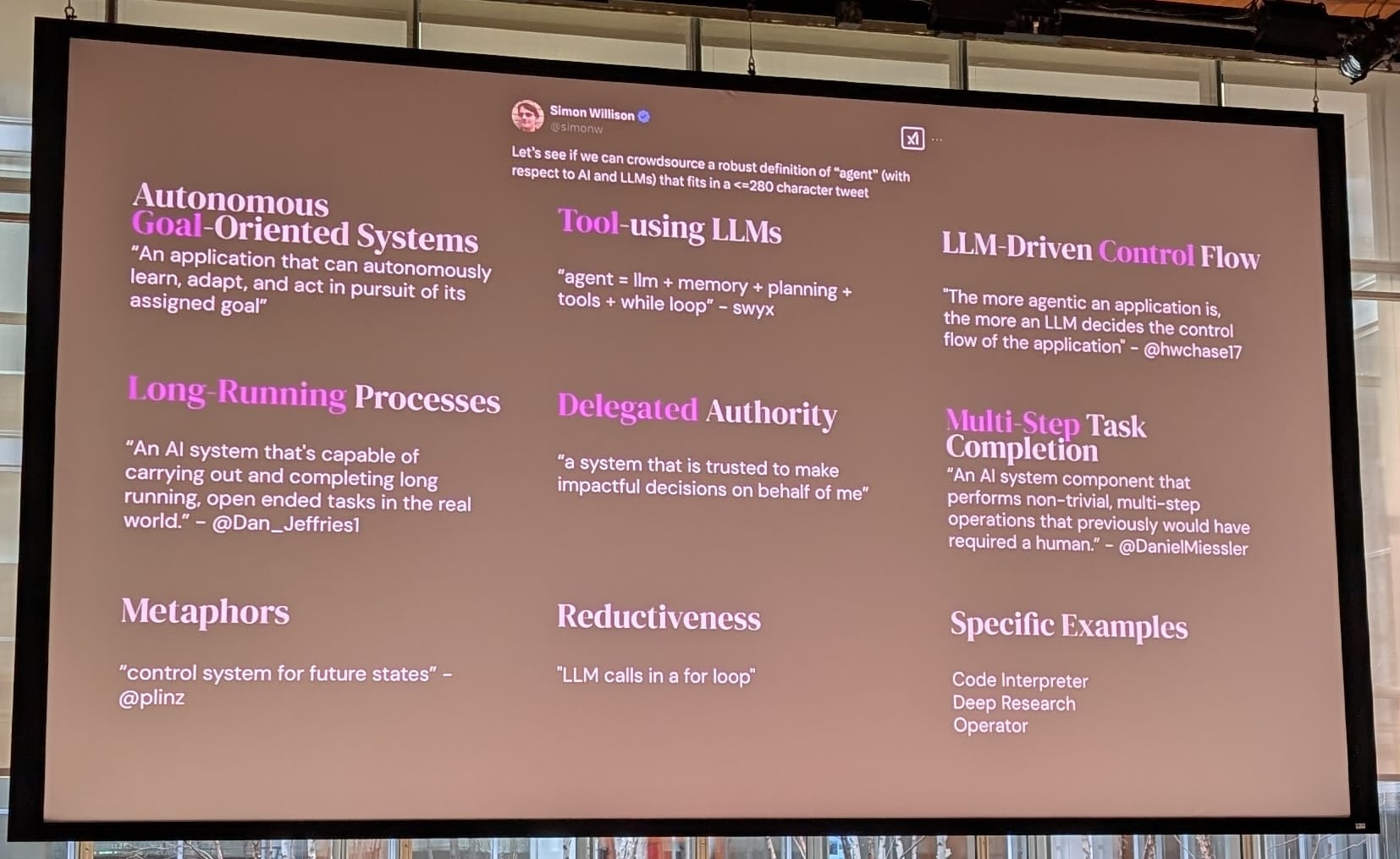

In any case, the natural question is "What exactly is an Agent anyway?" Do we mean the OpenAI definition of an Agent? The IBM definition? One of the six top definitions Simon Willison crowdsourced (courtesy of Swyx at the AI Engineer Conference)?

Or something else?

It won't surprise anyone that I think each of these definitions gets some things right but is also somewhat flawed. Nor will it surprise anyone that plenty of definitions of Agent were crafted long before these, and that there is a whole research field dedicated to multi-agent systems. (One of my first major conference publications was a paper at the International Conference on MultiAgent Systems in Boston in 2000. The conference was then in its fourth year; it later merged with two other conferences to become AAMAS, which still runs today.)

As a bonus, we'll talk about multi-agent systems at the end.

A walk back in time

A full review of agent definitions would take a long time to write and read, but suffice it to say there was never a single definition. The agents in the academic literature took many forms:

- Fully embodied robots that explored their environment.

- Simple models of independent actors in a marketplace simulation to predict price dynamics.

- Complex software entities with internal knowledge representations and world models that communicated using standardized agent languages (KQML and FIPA-ACL being the two most widespread examples).

- Swarms of very simple entities that self-organized to perform extremely complex behaviors.

Trying to pin down a definition of "Agent" was a favorite dinner topic at many an AI conference.

Today's definitions

Fast forward to today, and the explosion of definitions isn't a huge surprise. We now have a powerful new set of tools to build agents with, and the level of excitement is high. Still, I'd argue that many current definitions make mistakes that either overcomplicate them or miss the point by a significant margin.

The problem with having multiple divergent definitions is that it can obscure the underlying challenges in building agent systems (and, by extension, the much, much harder challenge of building multi-agent systems!).

Drawing on the modern definitions referenced above (which are among the best I've seen in the modern era), here are some of their key formulations, along with a few questions each one raises:

- "An agent is a model equipped with instructions that guide its behavior, access to tools that extend its capabilities, encapsulated in a runtime with a dynamic lifecycle." (Does it need instructions? Does it need to be a model? What does it actually mean to be a model?)

- "An Application that can autonomously learn, adapt, and act in pursuit of its assigned goal." (Is it key to be able to learn? Must a goal be assigned? By whom?)

- "An AI system that is capable of carrying out and completing long-running, open-ended tasks in the real world." (Do the tasks need to be long-running? Do they need to be completed?)

- "Agent = LLM + Memory + Planning + Tools + While Loop." (Do we need memory? Do we really need an LLM? Or planning? What about a FOR loop followed by a graceful retirement?)

- "A system that can be trusted to make impactful decisions on my behalf." (Does it have to be on behalf of a user? What about on its own behalf? Is a human an agent?)

- "The more agentic an application is, the more an LLM decides the control flow of the application." (Does it need to be an LLM? Is agenthood a gradual slope or a binary state?)

- "An AI System component that performs non-trivial, multi-step operations that previously would have required a human." (Do they have to be that non-trivial? What about tasks that humans could not do in the first place? Is a virus checker an agent?)

The point here is not to be negative about these definitions. The notion of an agent is genuinely hard to define, and all these authors stepped up with an attempt.

In fact, I'd go so far as to say I LOVE each of these definitions, because each illustrates part of a core essence, infused with the current technical zeitgeist.

Historical definitions often suffered from a reductive view of agents as "simple" because it was so hard to imagine software systems as powerful as what can be built today with LLMs. The modern definitions suffer somewhat from the reverse problem: the assumption that you need something as complex as an LLM to build an agent.

What is the notion of "Agent" really about?

It's unlikely any single definition is going to meet everyone's needs, but I'd argue that it's helpful to build on a relatively robust core definition and then extend from there.

The modern definitions share a number of core themes, but most also miss at least one key point that recurs throughout historical definitions: the notion of agents as "embedded" or "situated" in an environment. The modern definitions also (very naturally, but unnecessarily) anchor to LLMs as a key element. You really don't need anything as powerful as an LLM to make an agent.

I take no credit for this formulation, since it's really just a synthesis of historical definitions with some infusion from modern ones, but here is arguably the essence.

An Agent is:

A system with the autonomy and ability to act to achieve one or more goals in its environment.

This could be a software system (a Software Agent), an artificial intelligence system (an AI Agent), or even a biological agent (the mouse that just chewed through your electrical wiring).

Breaking down the definition, the key elements are:

- We're talking about a single entity (a system).

- It is autonomous, in that it has the means to choose whether or not to act based on some criteria. (This is the most troublesome part of any agent definition, but in many ways it is a key property, as we will see later.)

- It has the ability to act. In other words, it has a set of affordances that can operate in the environment. These could be innate abilities or tools, and they could be actions that change the physical world (e.g., destroying power cables) or that simply gather information (e.g., an agent spy).

- The agent is situated in an environment in which it operates. The environment could be physical or digital, it could be static or fast-evolving. The environment creates the context in which the Agent does its work.

- The agent has goals it can choose to achieve or not. These goals could be innate (pre-programmed), assigned by another agent (human or software), or arise dynamically (even randomly, or based on some feature of the environment).
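To make the point that none of this requires an LLM, here is a minimal Python sketch that maps each element of the definition to code: a system (`Agent`), goals tested against an environment, affordances (`actions`), and autonomy as the agent's own `choose` criterion, which may decline to act. All names here, including the thermostat example, are hypothetical and chosen purely for illustration; this is one possible reading of the definition, not a reference implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# A hypothetical, deliberately simple environment: named numeric facts.
Environment = Dict[str, float]


@dataclass
class Goal:
    name: str
    satisfied: Callable[[Environment], bool]  # tested against the environment


@dataclass
class Agent:
    goals: List[Goal]
    # Affordances: named actions that operate on the environment.
    actions: Dict[str, Callable[[Environment], None]]
    # Autonomy: the agent's own criterion for whether (and how) to act on a goal.
    choose: Callable[[Goal, Environment], Optional[str]]

    def step(self, env: Environment) -> List[str]:
        """One pass over the goals; returns the names of actions actually taken."""
        taken: List[str] = []
        for goal in self.goals:
            if goal.satisfied(env):
                continue
            action = self.choose(goal, env)
            if action is None:  # the agent may decline to act
                continue
            self.actions[action](env)
            taken.append(action)
        return taken


def heat(env: Environment) -> None:
    env["temp"] += 1.0
    env["budget"] -= 1.0


# Arguably an agent under this definition: no LLM, memory, or planning involved.
thermostat = Agent(
    goals=[Goal("warm", lambda env: env["temp"] >= 20.0)],
    actions={"heat": heat},
    choose=lambda goal, env: "heat" if env["budget"] > 0 else None,
)

env: Environment = {"temp": 18.0, "budget": 1.0}
print(thermostat.step(env))  # ['heat']: goal unmet, budget available
print(thermostat.step(env))  # []: goal still unmet, but the agent declines to act
```

The second call is the interesting one: the goal is still unmet, yet the agent's own criterion tells it not to act, which is the autonomy element in miniature.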

In addition to the core definition, one might want to add a couple of other stipulations:

- Most useful agents are probably long-running or durable in some way; that is, they stick around to do tasks. This isn't strictly necessary, as long as they are autonomous and have some kind of identity that makes them an entity. There's no real reason why one could not have "one-shot" agents that disappear once their single task is achieved or even launched.

- Most useful agents probably have some kind of sensing loop that checks the environment for triggers and change. Again, this isn't strictly necessary: one could insert many agents into an environment to try to achieve a goal without their ever refining their course of action (a brute-force denial-of-service attack doesn't require a lot of sensing).

Still, even with those additions, quite a few things from recent definitions are missing: LLMs, while loops, models, planning, external instructions, learning, memory, etc. All of these are useful for some types of agents, but it is hard to argue that they are central to the notion of an agent.

To see this, it's worth thinking about what a multi-agent system would look like, which we'll do below.

Before we wrap up this definition, let's return to the notion of "Autonomy," which is the trickiest of the definition items above. What does it mean for a system to be able to act autonomously? Does it mean no human instruction? No human triggers? No triggers from other agents?

There is a deep philosophical rabbit hole here that has parallels with the question "what is consciousness?":

- Is an X/Twitter bot an agent if it just spams users when they mention a keyword?

- Is a virus an agent? Is a single virus particle?

- If an agent is triggered by pushing a button, is it still an agent? Or just a function in an application?

This is hard to resolve, but a useful engineering definition is that external triggers are allowed (from humans, from other agents, from the environment) as long as the system in question has some kind of internal logic that enables it to determine if it should execute the requested behavior. This could be anything from a complex evaluation of the chances of success of an action to a simple safety or identity check before performing an action.

It's also important to note that triggers often don't result in immediate action. A security scanning system, for example, may passively gather information for long periods before suddenly activating to thwart a perceived breach.
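The engineering reading of autonomy above (external triggers are fine, provided the system applies its own logic before acting) can be sketched in a few lines of Python. The `SecurityScanner` class, its threshold, and its trusted-source list are hypothetical illustrations, not a real product's behavior: it mostly observes passively, activates on its own once evidence crosses a threshold, and runs the same internal identity check on externally requested actions.

```python
from collections import Counter
from typing import List, Tuple


class SecurityScanner:
    """Hypothetical scanner: observes passively, acts only when its own criteria are met."""

    def __init__(self, threshold: int = 5, trusted: Tuple[str, ...] = ()):
        self.threshold = threshold
        self.trusted = set(trusted)
        self.failures: Counter = Counter()
        self.blocked: List[str] = []

    def observe(self, source: str, login_ok: bool) -> None:
        # Passive sensing: most observations only update internal state.
        if not login_ok:
            self.failures[source] += 1
        if self.failures[source] >= self.threshold:
            self._block(source)  # self-triggered activation

    def request_block(self, source: str) -> bool:
        # External trigger (from a human or another agent): not obeyed blindly.
        return self._block(source)

    def _block(self, source: str) -> bool:
        # Internal identity check applied to every trigger, internal or external.
        if source in self.trusted:
            return False
        if source not in self.blocked:
            self.blocked.append(source)
        return True


scanner = SecurityScanner(threshold=3, trusted=("10.0.0.1",))
for _ in range(3):
    scanner.observe("203.0.113.9", login_ok=False)
print(scanner.blocked)                    # ['203.0.113.9'] after the third failure
print(scanner.request_block("10.0.0.1"))  # False: the request fails the internal check
```

The point of the design is that `request_block` is a trigger, not a command: the decision still passes through the agent's own logic.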

Unix daemons were agents before there were agents.

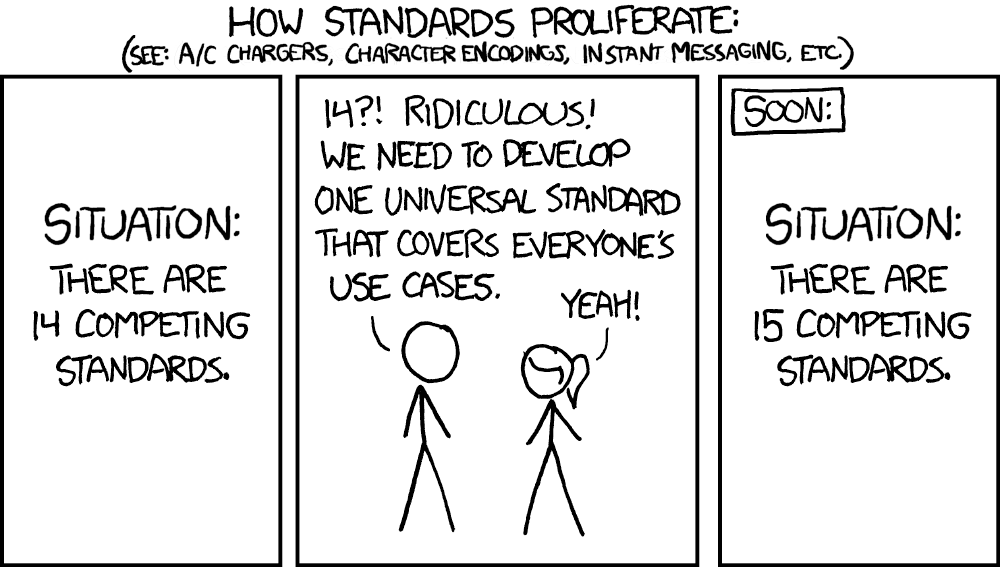

So now we have the 15th Agent Definition?

Great, so now we have a new definition of "Agent." What did we gain? There will no doubt be many new definitions over time, and it doesn't matter which one wins, but it's helpful to think about the implications of a definition for systems.

The reason I prefer a simpler definition like the one in the previous section is that it focuses attention on a few important things about agent systems. In particular:

- Autonomy really matters. Whether it's a long-running for-loop or a system that can be triggered by some set of environmental conditions (a security scanner), agent systems are asynchronous, and they can pop up and act at almost any time. If you are building long, complex workflows, think hard about whether you really need agents. If you're building a system that reacts to environmental change, where processes contribute but aren't tightly coupled, agents may be what you want.

- Environment really matters. It's important to think about agents as embedded in an environment. For example, a system that can take powerful and varied actions and has access to nearly complete personal data has a lot of security implications to manage. Apple has probably learned this over the last six months, which has resulted in the delay of its much-trailed Siri AI upgrade.

- Environment X abilities = Power. I love the idea of "tools" for agents (such as exa.ai and browserbase.com). This infrastructure makes the open web an environment agents can operate in. Together, the power of the tools and the "power" of the environment determine both the potential range of outcomes and the risk of erroneous actions.

- Goals can come from anywhere. Some may be hard-wired; some may be random. When designing agents, it is really important to ensure all the possible sources of goals are accounted for and well managed. Is the agent searching the web for information and building plans on what else to search? To what extent does the incoming information form new goals? Could that run and run using unlimited compute?

- Many "multi-agent" systems are really single-agent systems. Many of the multi-agent systems mentioned in the last few months are really chains of subsystems in which each step fully determines the actions of the next, or collections of subsystems that each generate a result so the best one can be chosen. Neither of these architectures really corresponds to a multi-agent system. For a system to be a multi-agent system, each agent needs to be autonomous. In other words, it would need to have its own goals and some criteria on whether to pursue those goals or not. In the broadest sense, the system is not truly multi-agent until each "agent" serves a different owner (which could be itself). There's nothing wrong with architectures that decompose activities between subsystems, but until you have the potential for those subsystems to conflict with each other, you are arguably building a single-agent system.

- Agents in a multi-agent system interact via their environment (whether you like it or not). Those conflicts happen more quickly than you think. Even if agents are not directly in message contact with one another, they can interact. Any scenario in which multiple agents can access the same resource can produce interaction between them (e.g., two code-modifying agents working on the same code base, two scheduler agents scheduling jobs on the same server cluster, or fifty search agents writing to the same database). These interactions require coordination (explicit or implicit) and need to be accounted for.
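The "same resource" point can be made concrete with a small, deterministic Python sketch. The `SharedStore` class and `interleave` function are hypothetical: two agents never message each other, yet they interfere through a shared record. Without coordination, one agent's update is silently lost. A version check (optimistic concurrency control, shown here as one common option among many) lets the second agent detect the conflict and retry.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class SharedStore:
    """Hypothetical shared resource with a version number per key."""
    data: Dict[str, Tuple[int, int]]  # key -> (value, version)

    def read(self, key: str) -> Tuple[int, int]:
        return self.data[key]

    def write_blind(self, key: str, value: int) -> None:
        # No coordination: the last writer wins.
        _, version = self.data[key]
        self.data[key] = (value, version + 1)

    def write_if_version(self, key: str, value: int, expected: int) -> bool:
        # Optimistic concurrency: reject the write if someone else wrote first.
        _, version = self.data[key]
        if version != expected:
            return False
        self.data[key] = (value, version + 1)
        return True


def interleave(coordinated: bool) -> int:
    """Two agents each add one job to a shared counter; their reads interleave."""
    store = SharedStore({"jobs": (0, 0)})
    a_val, a_ver = store.read("jobs")  # agent A reads
    b_val, b_ver = store.read("jobs")  # agent B reads the same snapshot
    if not coordinated:
        store.write_blind("jobs", a_val + 1)
        store.write_blind("jobs", b_val + 1)  # silently overwrites A's update
    else:
        store.write_if_version("jobs", a_val + 1, a_ver)
        if not store.write_if_version("jobs", b_val + 1, b_ver):
            b_val, b_ver = store.read("jobs")  # B detects the conflict and re-reads
            store.write_if_version("jobs", b_val + 1, b_ver)
    return store.read("jobs")[0]


print(interleave(coordinated=False))  # 1: one agent's work was lost
print(interleave(coordinated=True))   # 2: the conflict was detected and resolved
```

Real agents would interleave nondeterministically, which makes these bugs harder to see; the fixed ordering here just makes the interaction reproducible.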

To agent or not to agent, that is the question...

Given that a simple-sounding topic turned into a 2000+ word blog post, you might be forgiven for thinking that it's best to stay away from agents altogether. Or maybe it's that the "agentic" hype is just too much to deal with.

Both of those sentiments are understandable, but you probably can't escape. As soon as you begin to build AI systems that can "act" in some environment, you're well on the way to building an agent. Once you go down this path, and especially if you add a dash of autonomy (long-running process, trigger rules, ...), it's worth thinking of your system as an agent.

Looking at your system design through this lens, you can start to be explicit about what the environment is, what actions/tools are available, how decisions are made, and so forth, all of which will generally lead to better system design.

Happy #AgentEngineering!

Some Further Reading

- I like Sean Wu's post on three agent definitions.

- TechCrunch on agent confusion: "No one knows what the hell an AI agent is".

- The Agentlink Technology Roadmap published in Sept 2005. Everything you needed to know about agents in 2005.

- Swyx on the Latent Space blog (hat-tip Sam Ramji): https://www.latent.space/p/agent