Dispatch from the AI Engineer Summit Day 2: More agent definitions, sidestepping model costs, and dialing agents

Day 2 of the conference, with more great speakers and fun insights

The AI Engineer Summit wrapped the main content sessions on Friday with some great talks throughout the day. Overall, if there's one theme, it's that the ML model you're building on is only 20-30% of what you need to consider. In the end, delivering an AI/ML-powered application to users requires rock-solid end-to-end systems. Following up on yesterday's Day 1 post, here are a few of the key ideas from throughout the day:

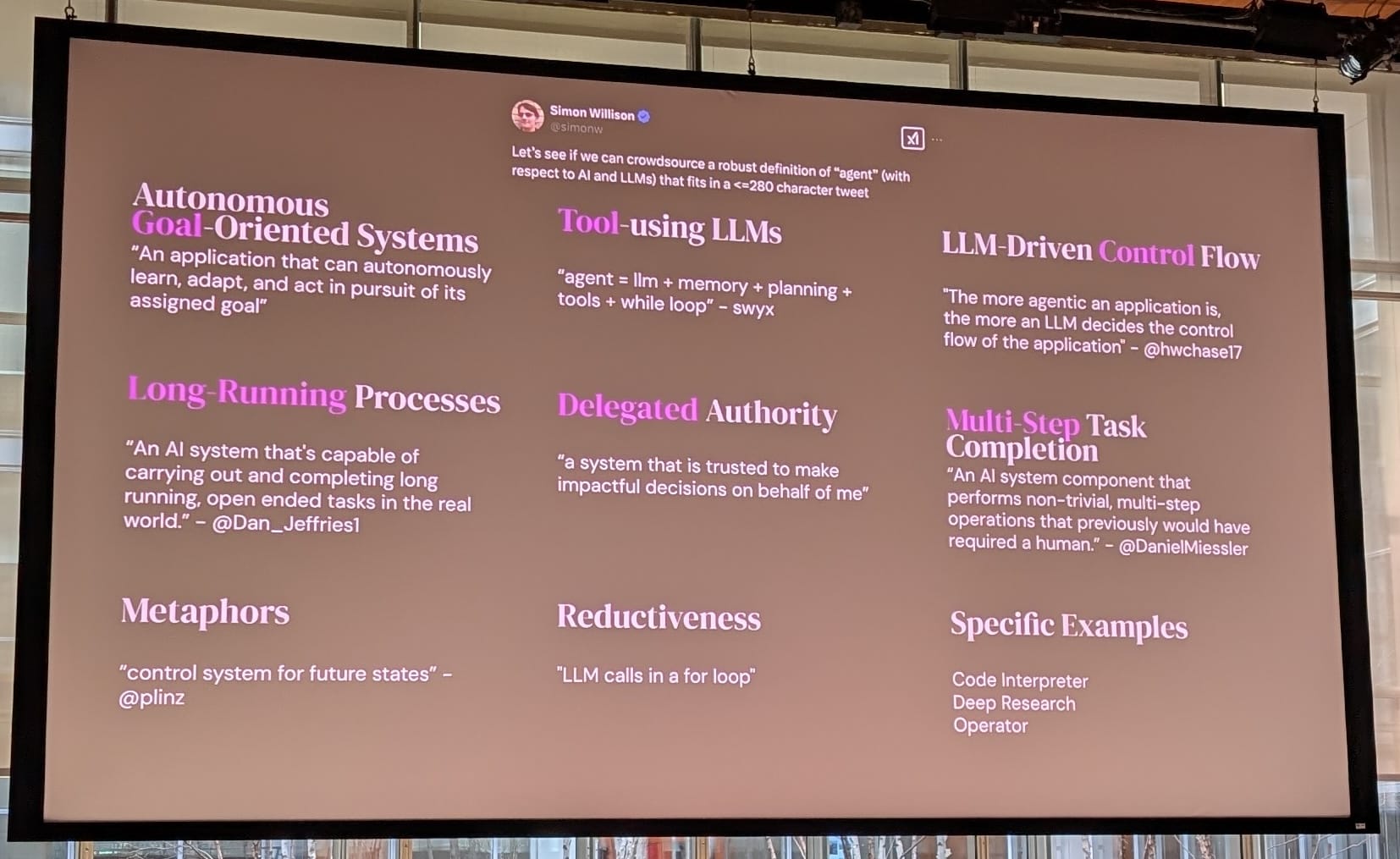

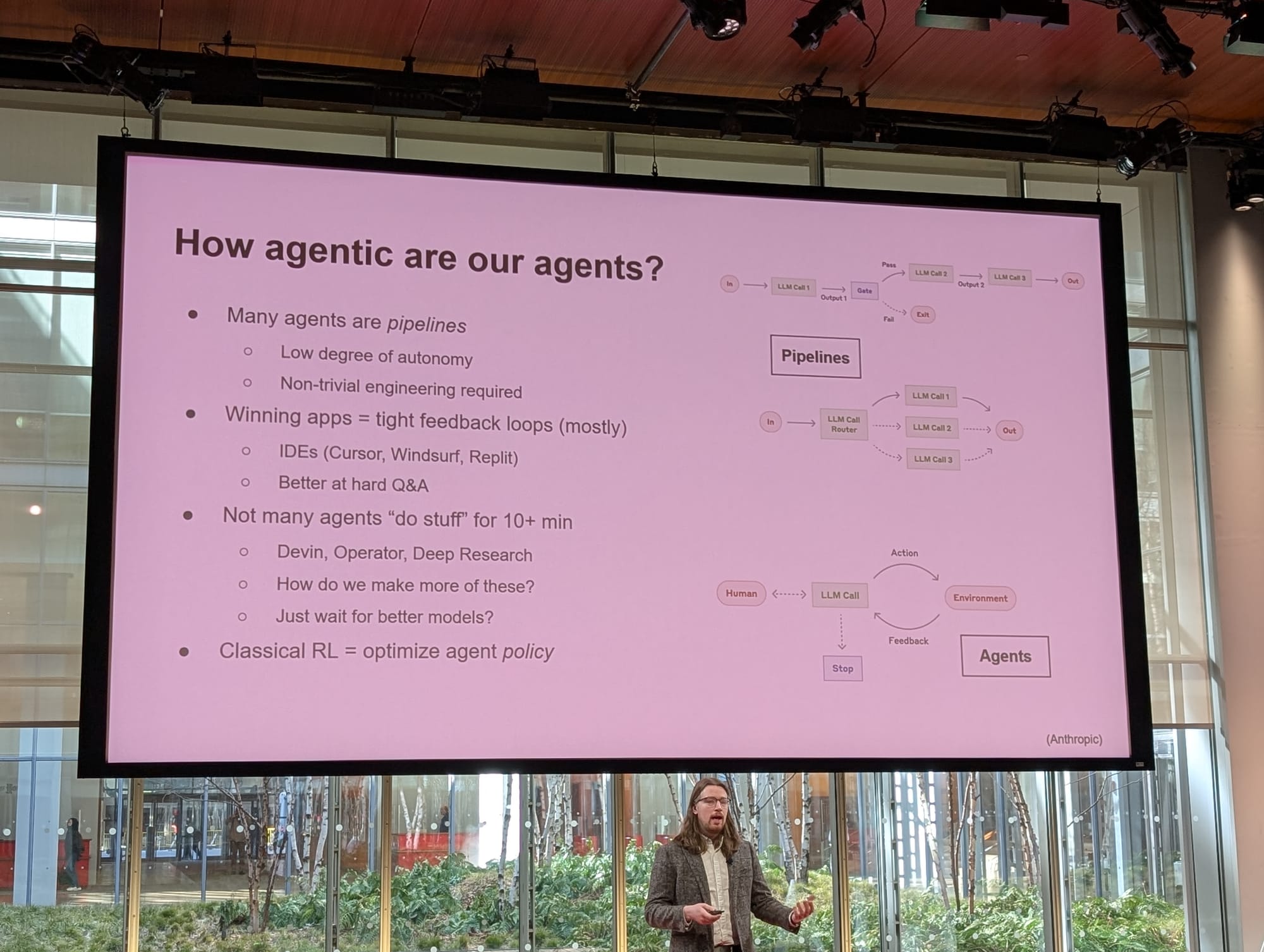

- Agent definitions. Inevitably, the agent definition topic came back with a vengeance. Swyx presented six in his opening talk. My take: autonomy is still missing in almost all of them. (One way to see why autonomy is key: most useful agents will need to act in environments that include other agents that can also act.)

- Task and Skill learning vs. Environments. Will Brown gave a great talk on what agents (models) are really learning in current training methods. He covered his GRPO framework and how the learning cycle is focused on optimizing for change in the environment. This, in turn, means changing the reward functions. Learning like this looks a lot more like what AlphaGo did than today's LLM training.

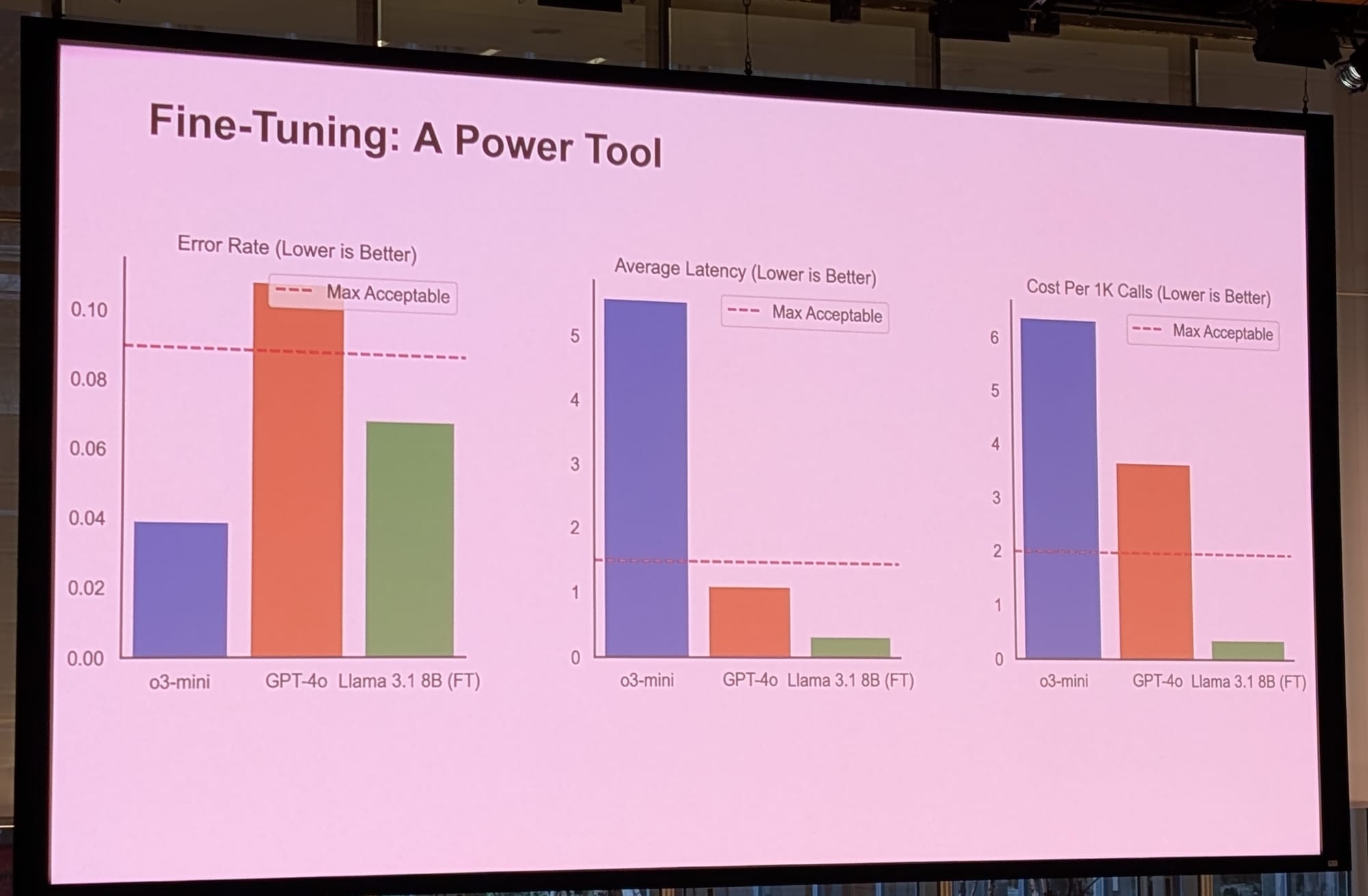
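For readers who haven't met GRPO (Group Relative Policy Optimization), here is my rough understanding of its core scoring step: sample several completions for the same prompt, score each with a reward function, and then rate each completion relative to the rest of its group. The snippet below is a toy sketch of that step only, not code from the talk. The function name and the example rewards are made up for illustration, and I use the population standard deviation where real implementations may differ.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and spread of its own group.

    Completions that beat the group average get a positive advantage,
    those below it get a negative one. `eps` avoids division by zero
    when every completion in the group scored the same.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; implementations vary
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four completions sampled from one prompt,
# e.g. 1.0 if the task in the environment succeeded, 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
# Roughly [1, -1, -1, 1]: successes are pushed up, failures pushed down.
```

The point that stuck with me is that everything interesting lives in how the rewards are produced. Change the environment or the reward function and the same loop teaches a different skill.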

- Business model conundrums. The talk given by Mustafa Ali (Method Financial) and Kyle Corbitt (OpenPipe) contained the single most important slide of the whole conference. Building high-volume apps on top of frontier models is highly challenging due to trade-offs in latency, error rate, and cost. But by using the frontier models to first validate the use case, understand the majority of user queries, and map out the space, you set up the conditions to train a much smaller fine-tuned model based on an open-source one. The smaller model is much cheaper to run, can be co-located with your application code (for low latency), and may well get to best-case error rates. This emerging best practice is a great approach for everyone involved, except the frontier model providers, who end up being used only at experiment/training time and then cut out of the revenue loop later on. Multi-billion-dollar business model conundrums.

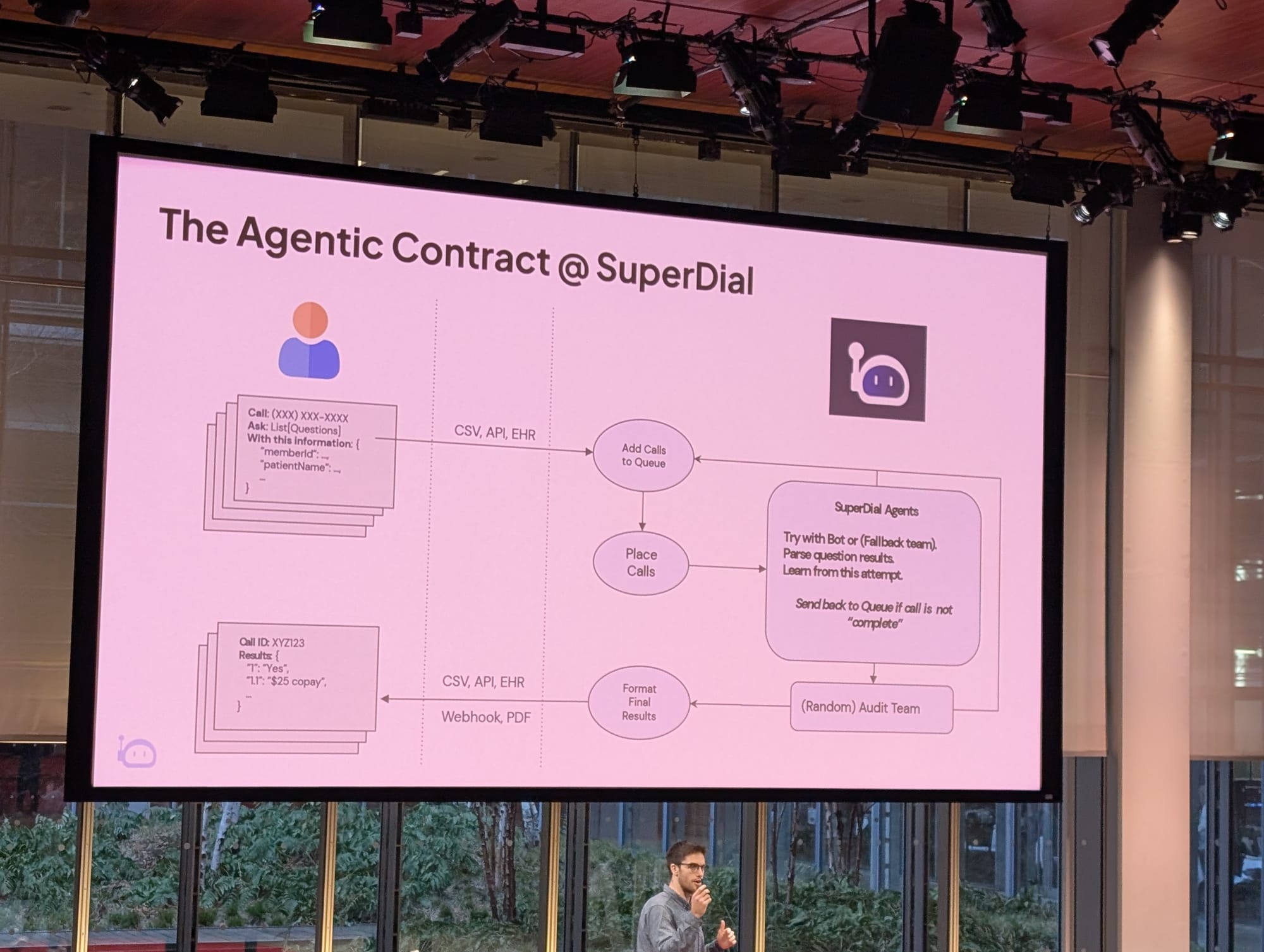
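To make the frontier-to-small-model pattern concrete, here is a minimal sketch of the data handoff as I picture it: log what the frontier model answered in production, keep the answers that were judged good, and write them out as training examples for the smaller model. This is not the speakers' pipeline. The log fields (`prompt`, `response`, `accepted`), the sample entries, and the chat-style record layout are all assumptions for illustration, so adjust them to whatever your fine-tuning tooling expects.

```python
import json


def build_finetune_records(logged_calls):
    """Turn accepted frontier-model calls into chat-style training records."""
    records = []
    for call in logged_calls:
        if not call["accepted"]:
            continue  # skip answers that failed review or validation
        records.append({
            "messages": [
                {"role": "user", "content": call["prompt"]},
                {"role": "assistant", "content": call["response"]},
            ]
        })
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


# Hypothetical production log collected while the app ran on a frontier model.
logged_calls = [
    {"prompt": "List the open accounts.", "response": "Two accounts: A and B.", "accepted": True},
    {"prompt": "What is the balance on A?", "response": "I am not sure.", "accepted": False},
    {"prompt": "When is the next payment due?", "response": "On the 1st.", "accepted": True},
]

records = build_finetune_records(logged_calls)
dataset = to_jsonl(records)
```

The filter is where the frontier model earns its keep: it has already shown you which queries matter and which answers are acceptable, and the smaller model only has to learn that slice.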

- Users strike back, and (maybe) voice wars ensue. Fed up with wading through phone holding patterns, increasingly automated phone menu option trees, and (now) talking to company AI agents on the phone when you need something from your bank, insurer, government, or someone else? Superdial is an example of a company that is taking the other side. Their agents dial on behalf of the user and take on lengthy administrative phone calls. The current target application is calling health insurance providers in the United States to get coverage information. The LLM-based application calls the service provider and interacts with automated systems and humans. If things go wrong, a live agent takes over and completes the call. The stage demo recording of the agent talking to a real insurance representative was as impressive as it was eerie. It is hard not to feel empathy for the humans on the service provider side now receiving robocalls that they have to answer. It's possible that the AI callers will be easier to deal with than humans, but it can't be long before these are simply AI-AI conversations.

- The next generation of AI Engineers. Stefania Druga's talk covered a wide range of coding and learning tools to support children in discovering how to build AI systems. It struck me how much some of the scenes echo the hundreds of sci-fi movies where a child protagonist has an AI sidekick or robot of some kind, and they mutually support each other's actions. Children growing up today seem likely to have a radically different technological experience from ours.

That's a wrap for the event for me. It was a fantastic crowd and a great set of speakers. Lots of challenges to think about!

Props to the AI Engineer team for putting on a really great event!