Friday Links: AI Fairness, EU AI Law Advances and Jailbreaks

Here are this week's five links. If you're ready to have LLMs linked directly to your brain, then Elon Musk has something for you...

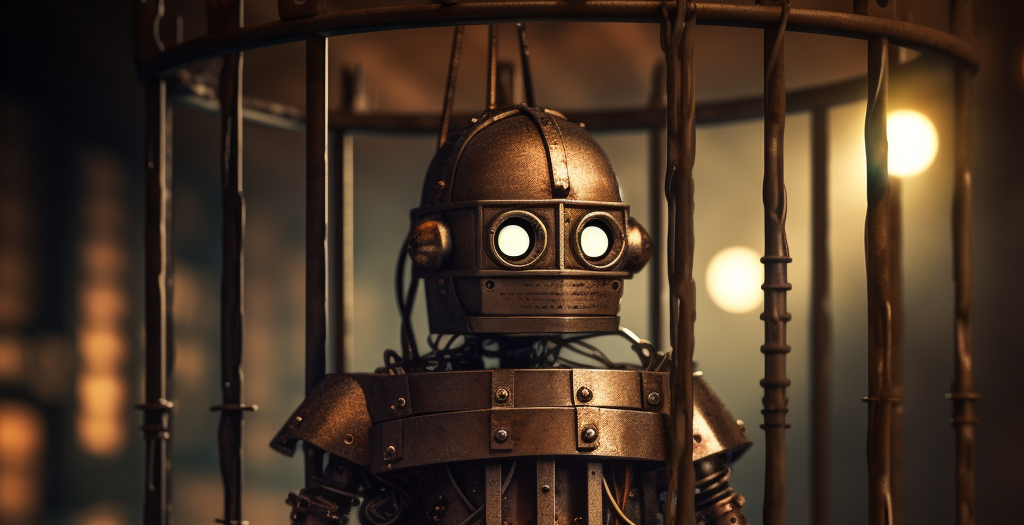

- AI Tidbit's methods for securing LLMs from Jailbreaks: this is a useful, concise overview, but more than anything, it highlights how easy it is to get LLMs to do things their operators don't want them to do. These powerful systems are extremely difficult (one could argue impossible) to secure, since you effectively need an LLM even more powerful than the one you have to assess the danger of incoming prompts. Humans work in a similar way: when you react to a situation and are about to blurt out something unwise, some higher-level cognitive function kicks in and stops you, but only if you're lucky.

- The inherent limitations of AI fairness. This is a super useful review of AI fairness techniques and what they might or might not improve (hat tip to Alessio for this one). Two standout points for me: 1) some techniques clearly help for certain use cases, but it will be impossible to apply a universal definition of fairness to all cases (indeed, there cannot be one, and that may even be true within specific applications), and 2) the overall difficulty of the approach can lead some organizations to claim fairness despite not putting in significant effort. Lastly, a positive aspect of AI fairness discussions is that they draw attention to our existing human processes, which are often much less fair than we would like them to be.

- Neuralink has implanted its first chip in a human brain. The implant trials were approved in September 2023 and are for Telepathy, which aims to allow a user to control a phone or computer with thought alone. The first applications are for patients who have lost the use of their limbs. The trial has detected neural activity, which makes it a first step towards learning the signals that correlate with real-world actions.

- EU countries give assent to Artificial Intelligence law. There's now a final agreement not to have special carve-outs for the largest models. I'm not sure that carve-out made sense, but there's certainly a risk that this part of the AI law limits innovation in the EU. In my opinion, powerful open source models are extremely important for ensuring we get AI that is equality-enhancing rather than inequality-enhancing. Regulation should really focus on the use of the technology and not the technology itself.

- OLMo - An Open Source LLM with open data, weights, checkpoints, and source code. This is a very cool release from the Allen Institute for AI (a non-profit). Having a model available that is not only useful but also includes the training data and training steps is hugely helpful in understanding how LLMs get built.
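
A quick aside on the fairness link: the claim that no universal definition of fairness can exist is easier to see with a toy example. The sketch below is my own illustration, not something taken from the review. It assumes two made-up groups with different shares of qualified people and a hypothetical classifier that is perfectly accurate. Two common fairness measures, demographic parity (equal selection rates) and equal opportunity (equal true positive rates), then disagree about whether that classifier is fair.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    group: str       # hypothetical group label, "A" or "B"
    qualified: bool  # ground truth
    selected: bool   # classifier decision


def selection_rate(people, group):
    """Share of a group that the classifier selects (demographic parity)."""
    members = [p for p in people if p.group == group]
    return sum(p.selected for p in members) / len(members)


def true_positive_rate(people, group):
    """Share of a group's qualified members that get selected (equal opportunity)."""
    qualified = [p for p in people if p.group == group and p.qualified]
    return sum(p.selected for p in qualified) / len(qualified)


def make_group(group, n_qualified, n_unqualified):
    """Build a group judged by a perfectly accurate classifier (toy assumption)."""
    return ([Person(group, True, True)] * n_qualified
            + [Person(group, False, False)] * n_unqualified)


# Made-up numbers: group A has 6 of 10 qualified, group B has 3 of 10.
people = make_group("A", 6, 4) + make_group("B", 3, 7)

parity_gap = abs(selection_rate(people, "A") - selection_rate(people, "B"))
opportunity_gap = abs(true_positive_rate(people, "A") - true_positive_rate(people, "B"))

print(f"demographic parity gap: {parity_gap:.2f}")
print(f"equal opportunity gap:  {opportunity_gap:.2f}")
```

With these numbers the equal opportunity gap is zero while the demographic parity gap is not, so the same classifier passes one definition and fails the other. Which definition is the right one depends on the application, which is the point the review makes.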

Wishing you a great weekend!